What feels most alive to me this month

Kalshi and Polymarket

Neither mainstream institutions nor their replacements in the current Trump administration are looking so hot these days. In that setting, prediction markets seemed like they might offer a better mirror onto reality: unbiased, nonpartisan, and clear-eyed. But the top two prediction markets qua prediction markets, Kalshi and Polymarket, aren’t covering themselves in glory.

Kalshi is pushing gambling by another name, enabling Robinhood (a) and Webull (a) to do the same, and getting pushback from states (e.g., New Jersey (a)). It’s suing some of those states back (a). Kalshi also had a bad market resolution on Oscars viewership numbers (a).

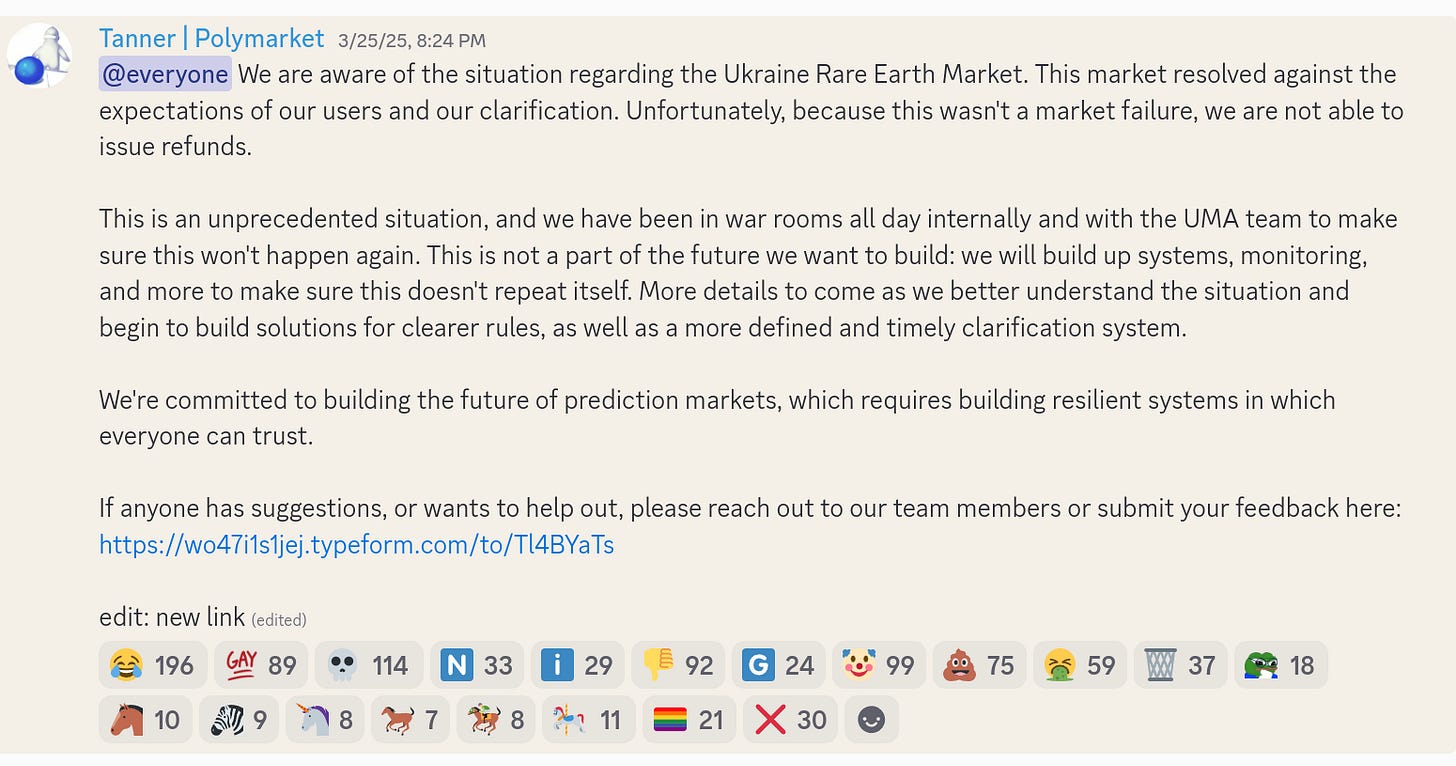

Kalshi never did have the mandate of heaven—the positive karma and good will that comes from being a prosocial actor in the scene it inhabits. Polymarket did at some point, but this month it reduced its positive karma through an incorrect resolution (a) and a subsequent very sloppy apology:

I basically don’t care at all about this individual market resolution per se. $7M in volume might correspond to $700K to $5M in total value locked at the end of the market, which isn’t that much compared to the $70M Polymarket previously raised, or to the volume in truly popular markets. If a small fraction of markets resolves incorrectly, that isn’t, so far, very important in absolute terms. Partly this is because I’m pricing in that almost every resolution mechanism will have some failure rate, and Polymarket’s failure rate so far doesn’t seem that bad.
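Spelling out that rough arithmetic (the $700K to $5M range is a guess at how much money is still locked when the market closes, not a figure Polymarket reports):

```latex
\begin{aligned}
\text{locked} / \text{volume} &\in \left[\tfrac{\$0.7\text{M}}{\$7\text{M}},\ \tfrac{\$5\text{M}}{\$7\text{M}}\right] \approx [10\%,\ 71\%] \\
\text{locked} / \text{raised} &\in \left[\tfrac{\$0.7\text{M}}{\$70\text{M}},\ \tfrac{\$5\text{M}}{\$70\text{M}}\right] \approx [1\%,\ 7\%]
\end{aligned}
```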

What I care about is the long-term health of the foresight space, and thus, to a lesser extent, the health of Polymarket. This specific incident is informative about the direction things are heading in: sports betting and quickly drafted markets on the current thing, rather than a mechanism to uplift knowledge aggregation and improve decisions. Crucially, if Polymarket doesn’t reimburse users after an incorrect resolution, perhaps one caused by a sloppy market specification, its incentives aren’t aligned with correct market resolution. For a market quickly fired off on a hot topic, there is only carrot, no stick.

On a positive note, here (a) is a dashboard tracking Polymarket accuracy, by @alexmccullaaa (a).
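Dashboards like this usually summarize accuracy with something like a Brier score and a calibration table. I don't know exactly what this particular dashboard computes. What follows is only a minimal sketch of those two standard metrics, run on made-up toy prices and outcomes rather than real Polymarket data.

```python
from collections import defaultdict


def brier_score(probs, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes (lower is better)."""
    assert probs and len(probs) == len(outcomes)
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


def calibration_table(probs, outcomes, n_bins=10):
    """Group forecasts into equal-width probability bins and compare the mean
    forecast in each bin with the observed frequency of YES outcomes."""
    bins = defaultdict(list)
    for p, o in zip(probs, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # a forecast of exactly 1.0 goes in the top bin
        bins[idx].append((p, o))
    table = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_forecast = sum(p for p, _ in pairs) / len(pairs)
        observed = sum(o for _, o in pairs) / len(pairs)
        table.append((idx / n_bins, mean_forecast, observed, len(pairs)))
    return table


# Toy data: final market prices and how each market resolved (1 = YES).
probs = [0.9, 0.8, 0.2, 0.1, 0.6, 0.3]
outcomes = [1, 1, 0, 0, 0, 1]

print(round(brier_score(probs, outcomes), 3))
for lower, mean_p, freq, n in calibration_table(probs, outcomes, n_bins=5):
    print(f"bin >= {lower:.1f}: mean forecast {mean_p:.2f}, observed {freq:.2f}, n={n}")
```

A well-calibrated market has the mean forecast close to the observed frequency in every bin. With only a handful of markets per bin, as here, the table is mostly noise.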

AI 2027

There is a new high-effort essay-slash-book hosted on its own domain, ai-2027.com (a). I think it’s pretty neat, but also that it underrates grain of truth problems (a): I expect unknown unknowns to make 2030 different from the authors’ scenarios.

Authors of the AI 2027 reports are offering bets (a). I would encourage a) trying to go for size, b) using this legal template (a), and c) more generally trying to bet on doom rather than on individual benchmarks.

Anyhow, why do some projects discussing AI get smooth (a) presentations (a) and good publicity in the NYT (a) and Times magazine (a), while others rail on Twitter (a) or remain on Tumblr (a)? Better ideas will get more adherents. But some ideas will also make their adherents want to spread them more, raise more funding, etc. From where I’m standing, there is a cluster of views, a kool-aid, that will get you showered with money, and criticism of those views is effortful and unrewarding. OpenAI has better lobbyists, though.

Top forecasters who contributed to AI 2027 (Eli Lifland, Tolga Bilge) are extremely bullish on AI doom & speed compared to the median top forecaster. My sense is that most top forecasters are not anticipating industrial-revolution-strength AI around the corner. You don’t get this scenario just by choosing top forecasters in other domains and extrapolating their views to AI progress. That said, sampling those forecasters is hard, since forecasters who are more doomish will be differentially more excited about participating in a project measuring AI risk.

A longer tail of events

I’m curious about how two seeds sprout: this futarchy fund (a) and this project to map how ideas spread (a).

Manifest 2025 is now live (a): June 6th to 8th, 2025, in California. I regret not having gone in previous years; I think I generally under-socialize, and meeting people is a massive multiplier.

A particular forecasting technique (rolling windows) works better in “periods without structural changes”. The broader point might be to put less trust in applying lessons learnt from the 1990s through the late 2010s to the present.
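To make the structural-change point concrete, here is a toy example of my own, not taken from the work being referenced. It runs a rolling-window mean forecast on a synthetic series whose level jumps once, halfway through. While the level is stable the rolling mean tracks it perfectly. Right after the break it lags by up to the full size of the jump, until the window has refilled with post-break data. The break point, window length and levels are all arbitrary choices.

```python
def rolling_mean_forecasts(series, window):
    """Forecast each point as the mean of the previous `window` observations.

    Returns one forecast per element of series[window:].
    """
    return [sum(series[t - window:t]) / window for t in range(window, len(series))]


def mae(xs):
    """Mean absolute error of a list of absolute errors."""
    return sum(xs) / len(xs)


# Synthetic series with one structural break: level 10 for 50 steps, then level 20.
BREAK = 50
series = [10.0] * BREAK + [20.0] * 50
window = 12

forecasts = rolling_mean_forecasts(series, window)
errors = [abs(f - y) for f, y in zip(forecasts, series[window:])]

# errors[i] is the error at time t = window + i, so split the errors by t.
pre = errors[: BREAK - window]               # t < BREAK: window holds only old-regime data
transition = errors[BREAK - window : BREAK]  # BREAK <= t < BREAK + window: window is mixed
post = errors[BREAK:]                        # t >= BREAK + window: window holds only new data

print(f"MAE before: {mae(pre):.2f}, transition: {mae(transition):.2f}, after: {mae(post):.2f}")
```

A longer window smooths out noise during stable periods but also stretches out the transition after a break. That trade-off is why the technique looks best when there are no structural changes.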

I thought it was interesting that in America you can declare illegal income on your taxes (a); failing to do so is what got Al Capone imprisoned. The IRS will also now start cooperating with immigration enforcement (a), which could set a wider precedent, though perhaps not yet.

Sentinel (a) is still going strong. This past month we paid particular attention to the rising chances (a) of a US strike on Iran.

Forecasting meetups are happening in Washington DC (a), Berlin (a), and NYC (a).

Augur is back (a). Truemarkets, a Polymarket competitor that had previously raised $4M, launched (a).

Disney is shutting down the 538 brand, but Nate Silver will continue publishing some polling aggregates (a).

Here (a) is a hook to integrate prediction markets with Uniswap pools. I’m not quite sure how it works.
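I haven't dug into the hook itself, so this is background only and does not describe that project. Suppose a YES outcome token, which pays $1 if the event happens, trades against a dollar stablecoin in a plain constant-product pool. That is the x · y = k invariant used by Uniswap v2. The pool's marginal price can then be read as an implied probability. The sketch below ignores fees and concentrated liquidity, and the class name and pool sizes are hypothetical.

```python
class ConstantProductPool:
    """Toy x*y=k pool pairing a YES outcome token with a $1 stablecoin (no fees)."""

    def __init__(self, yes_reserve: float, usd_reserve: float):
        self.yes = yes_reserve
        self.usd = usd_reserve

    def yes_price(self) -> float:
        # Marginal dollar price of one YES token. Since YES pays $1 or $0,
        # this is the probability the pool implies for the event.
        return self.usd / self.yes

    def buy_yes(self, usd_in: float) -> float:
        """Swap `usd_in` dollars for YES tokens, keeping yes * usd constant."""
        k = self.yes * self.usd
        new_usd = self.usd + usd_in
        new_yes = k / new_usd
        yes_out = self.yes - new_yes
        self.usd, self.yes = new_usd, new_yes
        return yes_out


pool = ConstantProductPool(yes_reserve=10_000, usd_reserve=4_000)
print(pool.yes_price())            # implied probability before the trade
tokens = pool.buy_yes(1_000)       # a trader who thinks YES is underpriced buys
print(tokens, pool.yes_price())    # tokens received, and the higher implied probability
```

Buying YES pushes its implied probability up, which is how traders' beliefs get aggregated into the price.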

AI startup Perplexity (a) can now do Fermi estimates, but its results are still a bit off. I also monitor mentions of Fermi estimates on Twitter, since there aren’t that many a month, and I’ve noticed Grok appearing more frequently as well.
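For readers who haven't seen one, a Fermi estimate multiplies a few rough factors together. A common refinement is to give each factor a range instead of a point value, then propagate the uncertainty by simulation. The sketch below applies this to the classic piano-tuners question. Every input range is made up for illustration, and this is not a claim about how Perplexity or Grok do it.

```python
import math
import random
import statistics


def lognormal_from_90ci(low: float, high: float, rng: random.Random) -> float:
    """Sample a lognormal whose 5th and 95th percentiles are `low` and `high`."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * 1.6449)  # 1.6449: z-score of the 95th percentile
    return rng.lognormvariate(mu, sigma)


def piano_tuners_sample(rng: random.Random) -> float:
    # All ranges below are illustrative guesses, not data.
    people = lognormal_from_90ci(2.5e6, 3e6, rng)                  # city population
    pianos_per_person = lognormal_from_90ci(1 / 100, 1 / 20, rng)
    tunings_per_piano_per_year = lognormal_from_90ci(0.5, 2, rng)
    tunings_per_tuner_per_year = lognormal_from_90ci(500, 1500, rng)
    return people * pianos_per_person * tunings_per_piano_per_year / tunings_per_tuner_per_year


rng = random.Random(0)
samples = sorted(piano_tuners_sample(rng) for _ in range(10_000))
print(f"median ~{statistics.median(samples):.0f}, "
      f"90% interval ~{samples[500]:.0f} to {samples[9500]:.0f}")
```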

Interest rate dynamics are becoming a problem for prediction markets, as Eric Neyman explains in “Will Jesus Christ return in an election year?” (a).
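As I understand the argument, the mechanism is about capital lockup. Someone betting NO on a very unlikely event ties up almost $1 per share until resolution and forgoes the interest that money could earn elsewhere. Each side only trades if it expects to beat a risk-free rate r over the T years until resolution. That leaves a band of YES prices inside which nobody has an incentive to push the price toward the true probability. A minimal sketch, ignoring fees and assuming risk-neutral traders. The 5% rate and one-year horizon are illustrative.

```python
def no_arbitrage_band(p: float, r: float, years: float) -> tuple[float, float]:
    """Range of YES prices q at which neither side beats the risk-free rate.

    A YES buyer paying q expects p dollars at resolution; a NO buyer paying (1 - q)
    expects (1 - p). Requiring each to earn at least the annual rate r gives
        p / (1 + r)**years  <=  q  <=  1 - (1 - p) / (1 + r)**years.
    """
    discount = (1 + r) ** years
    return p / discount, 1 - (1 - p) / discount


# Hypothetical: an essentially impossible event resolving in one year,
# while a safe asset pays 5% a year.
low, high = no_arbitrage_band(p=0.0, r=0.05, years=1.0)
print(f"YES can sit anywhere from {low:.1%} to {high:.1%} without an easy profit")
```

With r = 0 the band collapses to the true probability. Higher rates and longer horizons widen it, which is why long-dated, low-probability markets look mispriced.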

DOGE moves to cancel NOAA leases (a). So far NOAA is still publishing coastal sea level forecasts (a).

An academic looks at forecasting future fraud (a) using Benford’s law and increasing accounting adjustments.
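Benford's law says that, for many naturally occurring quantities, the leading digit d appears with probability log10(1 + 1/d). About 30% of entries then start with 1, and under 5% start with 9. The usual check is a chi-square statistic comparing observed first-digit counts to that distribution, and a minimal sketch follows. It is a generic illustration, not the paper's method, and the inputs are toy sequences rather than accounting data.

```python
import math
from collections import Counter

# Benford first-digit probabilities for d = 1..9.
BENFORD = {d: math.log10(1 + 1 / d) for d in range(1, 10)}


def first_digit(x: float) -> int:
    """Leading nonzero digit of |x| (x must be nonzero)."""
    return int(f"{abs(x):.15e}"[0])  # scientific notation starts with the leading digit


def benford_chi_square(amounts) -> float:
    """Chi-square statistic of first-digit counts vs Benford's law (8 degrees of freedom).

    Larger means a bigger departure; about 15.5 is the 5% critical value.
    """
    digits = [first_digit(a) for a in amounts if a != 0]
    n = len(digits)
    counts = Counter(digits)
    return sum((counts.get(d, 0) - n * p) ** 2 / (n * p) for d, p in BENFORD.items())


# Toy inputs: powers of two follow Benford's law closely, while the
# integers 100..999 have uniformly distributed first digits.
print(round(benford_chi_square([2**k for k in range(1, 1001)]), 2))
print(round(benford_chi_square(range(100, 1000)), 2))
```

A large statistic is only a flag for a closer look. Many legitimate datasets, such as prices clustered around round numbers, don't follow Benford's law either.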

Japan to Classify Crypto as Financial Products, Impose Insider Trading Restrictions (a). Part of the point of crypto is that states don’t have that much power to do this effectively. But they might still try.

Cultivate Labs released ARC (a), a research companion built on their experience interacting with analysts through their forecasting platforms.

Metaculus has a new forecasting tournament on Trump outcomes (a) with a $15K prize pool.

An article looks at the limits of futarchy (a). It seems like projects might converge on a two-tier approach, where futarchy makes high-level decisions but delegates implementation details to some other process, because of nature of the firm (a) dynamics.
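For context, the core futarchy mechanism ("vote on values, bet on beliefs") runs one conditional market per proposal on some welfare metric, such as a token price. It then adopts the proposal whose conditional market forecasts the highest value, and trades in the branches that didn't happen are unwound. The sketch below covers only that decision step. The proposal names, prices, use of a time-weighted average price, and the `threshold` margin are all hypothetical. Under the two-tier approach, only high-level choices like these would go through the markets, with implementation delegated elsewhere.

```python
from dataclasses import dataclass


@dataclass
class ConditionalMarket:
    proposal: str
    # Time-weighted average price of the welfare metric (e.g. a token price),
    # conditional on this proposal being adopted.
    conditional_twap: float


def futarchy_decide(markets: list[ConditionalMarket], threshold: float = 0.0) -> str:
    """Pick the proposal whose conditional market forecasts the highest welfare.

    A proposal must beat the "status quo" market by a relative margin of `threshold`
    (a hypothetical guard against acting on noise); otherwise the status quo wins.
    Raises StopIteration if no "status quo" market is present.
    """
    status_quo = next(m for m in markets if m.proposal == "status quo")
    best = max(markets, key=lambda m: m.conditional_twap)
    if best.conditional_twap > status_quo.conditional_twap * (1 + threshold):
        return best.proposal
    return status_quo.proposal


markets = [
    ConditionalMarket("status quo", 1.00),
    ConditionalMarket("hire new dev team", 1.08),
    ConditionalMarket("cut spending", 1.03),
]
print(futarchy_decide(markets, threshold=0.05))
```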

Kalshi pissed off Native American tribes (a) by encroaching on their gambling turf. If Kalshi’s strategy of calling sports gambling a “prediction market” works, DraftKings might try the same loophole (a). So might Sports Illustrated.

for example, with two dice, it is equally likely to throw twelve points, than to throw eleven; because one or the other, can be done in only one manner
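Leibniz's reasoning counts unordered outcomes, so eleven is {5, 6} and twelve is {6, 6}. But the two dice are distinguishable, so eleven can come up as (5, 6) or as (6, 5). That makes eleven twice as likely as twelve, which enumerating all 36 ordered outcomes shows directly:

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# All 36 equally likely ordered outcomes of throwing two six-sided dice.
totals = Counter(a + b for a, b in product(range(1, 7), repeat=2))

p_eleven = Fraction(totals[11], 36)
p_twelve = Fraction(totals[12], 36)
print(p_eleven, p_twelve)  # 1/18 1/36
```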

This newsletter is sponsored by the Open Philanthropy Foundation.

The Leibniz bit was super interesting. I'll remember it next time I make a mistake...

Leibniz was 67 when he wrote that letter. Might he have fallen prey to the retired physicist syndrome? https://www.smbc-comics.com/index.php?db=comics&id=2556

(by comparison, he was in his 20s-30s when developing calculus).

Elon comes to mind, not sure why...

> Kalshi also had a bad market resolution

Kalshi's 2025 Champions League Winner event alone had 3 incorrectly settled markets (Feyenoord, Club Brugge, and PSV Eindhoven all prematurely settled NO in the middle of 2-game series):

https://web.archive.org/web/20250306023200/https://kalshi.com/markets/kxuefacl/uefa-champions-league

and Kalshi settled the #1 and #2 US iPhone apps to the same app at the same time:

https://kalshi.com/markets/kxapprankfree/free-app-ranking#kxapprankfree-25jun15

https://kalshi.com/markets/kxapprankfree2/free-app-ranking#kxapprankfree2-25jun15